For those of you who are curious about Artificial Intelligence, foundation models and LLMs but haven’t had a chance to learn about them in a structured or formal way, we have a series of self-guided posts you shouldn’t miss.

Generative AI has boomed in recent times. With the advent of foundation models such as Large Language Models (LLMs), generative AI has delivered outcomes that go well beyond what we thought machines could create. It’s amazing to see how far AI and machine learning have come.

A human’s guide to Foundation Models & unlimited opportunities ahead

Transformers have become essential knowledge for every data scientist’s daily work. Familiarising oneself with their layers, architectures, inputs and outputs is key to working with them effectively.

Quick guide to Transformer Architectures

The field of Large Language Models (LLMs) is currently experiencing rapid development, with a significant focus on processing long sequences more efficiently. We cover two of the latest promising architectures, Mamba and RWKV, in the post below.

Achieving linear-time operations with shift in attention mechanisms in AI architectures – Mamba, Recurrent Windowed Key-Value

Let's revisit FMs

Foundation models serve as the base model for more specific models. A business can take a foundation model, train it on its own proprietary data and fine-tune it to a more specific task or a set of business domain-specific tasks.

Several providers, such as Amazon, IBM, Google and Microsoft, offer organisations frameworks for building, training and deploying AI models. However, these models sometimes perform poorly on niche or very recent topics, or on information that appeared after they were trained.

Large Language Models


Large language models (LLMs) are one category of foundation model. Foundation models exist for many data types, and a given model is usually built for a specific data type and hence a specific purpose. For example, language models take language as input and generate synthesised language as output. Other foundation models accept several data types; these are multimodal, meaning they work in modes besides language. In short, LLMs are the foundation models for natural language processing (NLP).

Large language models can be inconsistent. The world of a foundation model (LLMs included) is frozen in time: it exists as a static snapshot of the world as captured in its training data. Sometimes an LLM nails the most appropriate answer to a question, but at other times it throws up random facts from its training data. LLMs know how words relate statistically, but not what they mean.

One solution to this problem is retrieval augmentation: we retrieve relevant information from a live, external knowledge source and pass that information to our foundation model or LLM.
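To make the “live” part concrete, here is a minimal Python sketch, assuming a toy in-memory store. `KnowledgeStore`, `augment` and the word-overlap scoring are hypothetical illustrations, not any product’s API; the point is that a new fact becomes available the moment it is added, with no change to the model.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lower-case word tokens; a deliberately crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class KnowledgeStore:
    """Hypothetical in-memory external knowledge source.

    Documents can be added at any time; the LLM itself is never touched.
    """

    def __init__(self) -> None:
        self.docs: list[str] = []

    def add(self, doc: str) -> None:
        self.docs.append(doc)

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return up to k documents sharing the most words with the query."""
        q = tokenize(query)
        scored = [(len(q & tokenize(d)), d) for d in self.docs]
        scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated documents
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [d for _, d in scored[:k]]


def augment(question: str, store: KnowledgeStore) -> str:
    """Put the retrieved facts in front of the question before it goes to the LLM."""
    facts = "\n".join(f"- {d}" for d in store.search(question))
    return f"Facts:\n{facts}\n\nQuestion: {question}"


store = KnowledgeStore()
store.add("The office cafeteria opens at 8am.")
print(augment("When does the cafeteria open?", store))

# A new fact arrives later: no retraining, just add it to the store.
store.add("From March, the cafeteria opens at 7am.")
print(augment("When does the cafeteria open?", store))
```

A real deployment would replace the word-overlap search with a vector database or search engine, but the division of labour stays the same: the store changes, the model does not.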

Why do we need to ground LLMs?

The world, data and content are moving fast

An LLM has its own internal knowledge base, built from its training datasets. Because data and content move fast, that knowledge base can quickly become obsolete as new data flows in. One option is to fine-tune the model every minute or every day, but fine-tuning or retraining a model that often is not feasible unless you are willing to spend millions or even billions each time.

Hallucinations happen

We will cover hallucinations in detail in a separate article; for now, understand that even if an answer looks 100% legit, you can never fully trust it.

Lack of reference links

LLM responses need to be backed by references to make them trustworthy and legitimate.
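One common way to get references is to keep the source of every retrieved passage and ask the model to cite passages by number. The sketch below assumes that approach; `Snippet`, `prompt_with_citations` and the source IDs are made up for illustration, and whether a model actually follows the citation instruction depends on the model.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    text: str
    source: str  # e.g. a URL or document ID; the values below are invented


def prompt_with_citations(question: str, snippets: list[Snippet]) -> tuple[str, list[str]]:
    """Number each snippet so the model can cite it as [1], [2], ...

    Returns the prompt plus the ordered source list to show next to the answer,
    so a reader can check each cited claim.
    """
    numbered = "\n".join(f"[{i}] {s.text}" for i, s in enumerate(snippets, start=1))
    prompt = (
        "Answer using only the numbered passages below and cite them like [1].\n"
        f"{numbered}\n\nQuestion: {question}"
    )
    sources = [f"[{i}] {s.source}" for i, s in enumerate(snippets, start=1)]
    return prompt, sources


snippets = [
    Snippet("RAG retrieves documents at inference time.", "internal-wiki/rag-overview"),
    Snippet("Retrieved passages are added to the prompt.", "internal-wiki/prompting"),
]
prompt, sources = prompt_with_citations("What does RAG do?", snippets)
print(prompt)
print(sources)
```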

How does it work?

RAG for LLM

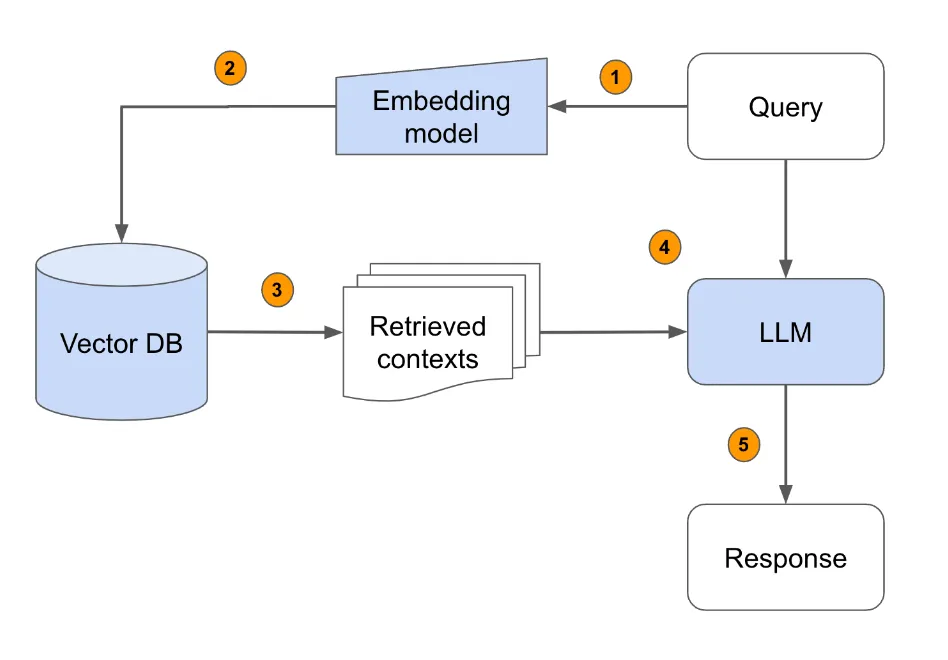

Retrieval-augmented generation (RAG) for large language models (LLMs) aims to improve prediction quality by using an external datastore at inference time to build a richer, augmented prompt that includes some combination of context, history, and recent and relevant knowledge.
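As a rough illustration of what such a richer prompt might contain, the following sketch assembles context, conversation history and retrieved knowledge into a single string. The function name `build_augmented_prompt` and the section layout are assumptions; real systems and model providers use their own prompt formats.

```python
def build_augmented_prompt(
    question: str,
    context: str,
    history: list[tuple[str, str]],
    knowledge: list[str],
) -> str:
    """Combine context, history and recent knowledge into one prompt string.

    The section headings and their order are illustrative choices, not a standard.
    """
    parts = [f"Context: {context}"]
    if history:  # earlier turns, as (role, text) pairs
        turns = "\n".join(f"{role}: {text}" for role, text in history)
        parts.append(f"Conversation so far:\n{turns}")
    if knowledge:  # snippets fetched from the external datastore
        facts = "\n".join(f"- {fact}" for fact in knowledge)
        parts.append(f"Relevant knowledge:\n{facts}")
    parts.append(f"User question: {question}")
    return "\n\n".join(parts)


prompt = build_augmented_prompt(
    question="Is the 2pm meeting still on?",
    context="You are a helpful office assistant.",
    history=[("user", "Book me a room for 2pm."), ("assistant", "Room B is booked.")],
    knowledge=["Room B is closed today for maintenance."],
)
print(prompt)
```

Without the retrieved fact about Room B, the model could only answer from the conversation history, which is exactly the stale-knowledge problem described earlier.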

“Retrieval-augmented generation (RAG) is an AI framework for improving the quality of LLM-generated responses by grounding the model on external sources of knowledge to supplement the LLM’s internal representation of information. Implementing RAG in an LLM-based question answering system has two main benefits: It ensures that the model has access to the most current, reliable facts, and that users have access to the model’s sources, ensuring that its claims can be checked for accuracy and ultimately trusted.”

Retrieval and Generation

As the name suggests, RAG has two phases: retrieval and content generation.

In the retrieval phase, the system takes the user’s prompt, then searches for and retrieves snippets of information relevant to it. This assortment of external knowledge is appended to the user’s prompt and passed to the language model.
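The retrieval phase can be sketched with classic TF-IDF scoring instead of learned embeddings, which keeps the example dependency-free. This is a swapped-in technique for illustration, assuming a small list of text snippets; `retrieve` and `augment_prompt` are hypothetical names, and the IDF formula is one common smoothed variant.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    """Lower-case word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_vectors(docs: list[str]) -> tuple[list[dict[str, float]], dict[str, float]]:
    """Return a TF-IDF weight dict per document plus the IDF table (reused for the query)."""
    doc_tokens = [tokens(d) for d in docs]
    n = len(docs)
    # Document frequency: in how many snippets each term appears.
    df = Counter(term for toks in doc_tokens for term in set(toks))
    # Smoothed IDF: rare terms weigh more; a term found in every snippet gets weight 1.
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vecs = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in doc_tokens]
    return vecs, idf


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    na = math.sqrt(sum(w * w for w in a.values()))
    nb = math.sqrt(sum(w * w for w in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank snippets by TF-IDF cosine similarity and keep the top k non-zero matches."""
    vecs, idf = tfidf_vectors(docs)
    # Query terms never seen in the snippets carry no information here, so skip them.
    qvec = {t: tf * idf[t] for t, tf in Counter(tokens(query)).items() if t in idf}
    scores = [cosine(qvec, v) for v in vecs]
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return [docs[i] for i in ranked[:k] if scores[i] > 0]


def augment_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Append the retrieved snippets to the user's prompt."""
    context = "\n".join(f"- {s}" for s in retrieve(query, docs, k))
    return f"{query}\n\nRelevant information:\n{context}"


docs = [
    "Mamba is a state space model architecture.",
    "RWKV combines ideas from RNNs and transformers.",
    "Paris is the capital of France.",
]
print(augment_prompt("What is the capital of France?", docs, k=1))
```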

In the generative phase, the LLM draws on the augmented prompt and its internal representation of its training data to synthesise a more appropriate answer tailored to the user’s prompt.

The Process

So, how does it work?

As Meta puts it, the model answers with both a closed and an open book.

take user prompt

Rather than passing the user input directly to the generator, send it to the vector search solution to find the relevant information.
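Vector search works by turning text into vectors and returning the stored items whose vectors are most similar to the query’s. In the sketch below, `embed` is a hashed bag-of-words function standing in for a real embedding model (an assumption made so the example runs without external services), and `VectorIndex` is a hypothetical brute-force index; production vector stores typically use approximate nearest-neighbour search.

```python
import hashlib
import math
import re

DIM = 256  # hypothetical embedding size


def embed(text: str) -> list[float]:
    """Hashed bag-of-words vector, L2-normalised.

    A stand-in for a real embedding model: it captures word overlap, not meaning.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        # md5 gives a stable bucket across runs (Python's built-in hash() is salted).
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class VectorIndex:
    """Hypothetical brute-force index of (vector, text) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 3) -> list[str]:
        """Return the k most similar texts; vectors are normalised, so dot = cosine."""
        q = embed(query)
        scored = [(sum(a * b for a, b in zip(q, v)), text) for v, text in self.items]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [text for score, text in scored[:k] if score > 0]


index = VectorIndex()
for text in [
    "Transformers rely on self-attention.",
    "Mamba uses selective state spaces.",
    "The Eiffel Tower is in Paris.",
]:
    index.add(text)
print(index.search("How do transformers use attention?", k=1))
```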

augment prompt

Once the relevant information has been retrieved, the system constructs a “prompt” that contains the question the user asked and the information received from the vector search.

search & generate

The augmented prompt is crafted to make the LLM respond the way you’d like. Once this is done, all of that information is sent to the LLM, which generates the response.
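Putting the three steps together, a minimal pipeline might look like the following. It assumes a hypothetical `generate` callable standing in for whatever LLM client you use; `fake_llm` is a stub so the example runs offline, and the word-overlap `retrieve` is a placeholder for the vector search step.

```python
import re
from typing import Callable


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy retrieval by word overlap; a real system would query a vector store."""
    q = set(re.findall(r"[a-z0-9]+", question.lower()))
    scored = sorted(
        ((len(q & set(re.findall(r"[a-z0-9]+", d.lower()))), d) for d in docs),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [d for score, d in scored[:k] if score > 0]


def build_prompt(question: str, passages: list[str]) -> str:
    """Augment step: the user's question plus the retrieved information."""
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant passages found)"
    return (
        "Use the information below to answer. If it is not enough, say so.\n"
        f"Information:\n{context}\n\nQuestion: {question}"
    )


def rag_answer(question: str, docs: list[str], generate: Callable[[str], str]) -> str:
    """Take the user prompt, retrieve, augment, then send everything to the LLM."""
    passages = retrieve(question, docs)
    return generate(build_prompt(question, passages))


def fake_llm(prompt: str) -> str:
    """Stub in place of a real LLM call: it only reports how much it was sent."""
    return f"LLM received {len(prompt.splitlines())} lines"


docs = ["RAG adds retrieved passages to the prompt.", "Bananas are yellow."]
print(rag_answer("What does RAG add to the prompt?", docs, fake_llm))
```

Keeping `generate` as a plain function parameter makes it easy to swap providers, or to test the retrieval and prompt-building steps without calling a model at all.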
